What I Learned from AI Failures Part 2: The Lessons Learned

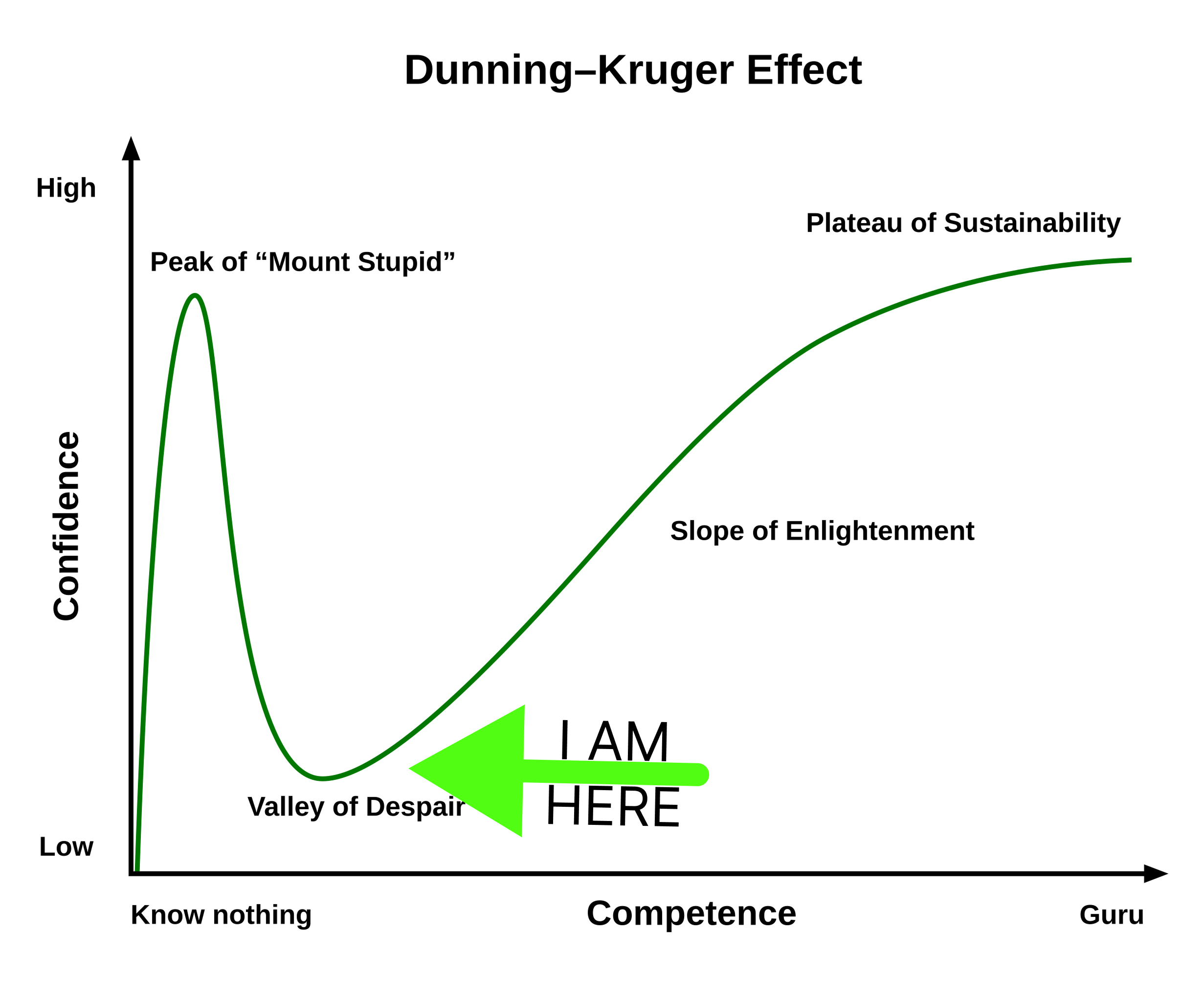

In "What I Learned from AI Failures Part 1: My Hapless Plan", I laid out the context of a project I recently had the pleasure of working on. It was an ambitious 32-course uplift aiming to augment existing video lectures with succinct summaries as well as a series of formative and summative assessments. To achieve these goals, I had built a plan founded on lofty expectations of what artificial intelligence (AI) could do for us, only to discover that I had planted my flag firmly atop Dunning-Kruger's fabled “Mount Stupid”.

For the full context, go back and read Part 1, but the short version is that a custom-built GPT was to read anywhere from 40 to 120 video transcripts at a time and produce outputs that met very specific criteria. The theory and early tests suggested that this would be a productive way forward, but the wheels started to fall off almost immediately. Here’s why.

Lesson #1: AI is a Bold-Faced Liar!

Perhaps a bold statement, but there is no sense sugar-coating it. When we tested the proposed process, all signs pointed to it working without a hitch. It was unzipping the files, reading the PDF transcripts, and generating content that accurately reflected the material in them. Each of the video transcripts had an alphanumeric filename, so we would ask it for a manifest of everything it unpacked and spot-check that manifest for accuracy.
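In hindsight, that spot check is easy to automate instead of doing it by eye. The sketch below is a minimal illustration, not the process we actually used: it assumes the transcripts arrive as a single zip archive and that the GPT's manifest has been copied into a plain list of filenames. The function name `compare_manifest` and the sample filenames `a7k2m.pdf` and `q9x4t.pdf` are hypothetical; `f3spg98.pdf` stands in for an invented entry like the one described below.

```python
import io
import zipfile


def compare_manifest(archive: zipfile.ZipFile, reported: list[str]) -> dict[str, list[str]]:
    """Compare filenames the GPT claims to have unpacked against the real archive contents.

    Hypothetical helper: assumes all transcripts are PDFs inside one zip file.
    """
    # Keep only PDF entries, dropping any folder path so names match the manifest
    actual = {name.rsplit("/", 1)[-1] for name in archive.namelist()
              if name.lower().endswith(".pdf")}
    claimed = {name.strip() for name in reported if name.strip()}
    return {
        # In the archive but never mentioned by the GPT (possibly skipped)
        "missing_from_manifest": sorted(actual - claimed),
        # Mentioned by the GPT but not in the archive (possibly invented)
        "not_in_archive": sorted(claimed - actual),
    }


# Build a small in-memory archive to demonstrate (hypothetical filenames)
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as zf:
    zf.writestr("unit1/a7k2m.pdf", "transcript text")
    zf.writestr("unit1/q9x4t.pdf", "transcript text")

with zipfile.ZipFile(buffer) as zf:
    result = compare_manifest(zf, ["a7k2m.pdf", "f3spg98.pdf"])

print(result)
# prints {'missing_from_manifest': ['q9x4t.pdf'], 'not_in_archive': ['f3spg98.pdf']}
```

Checking both directions matters: a skipped file and an invented file are different failures, and the GPT's own confirmation would hide either one.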

Given the scale of the project, we routinely asked for confirmation that it was doing all the things it needed to do, and it would confidently report back saying things like, “Yes, I have unzipped each file and have read each one. I have fact-checked it and am ready to start generating content for your course.” It would even go so far as to compliment us on how ambitious and ground-breaking this project was. “This is an important milestone for you and your team. I am happy to be a part of this ambitious project.”

However, I soon received reports from the developers of missing transcript files, filenames that didn’t match anything in the course, entire lessons being skipped, and video titles for topics that were never covered in the original course.

So, much like an interrogation scene in a crime serial, I shone a light in the GPT’s face and began questioning. “Where did you get this topic? There is no f3spg98.pdf; why is it on the manifest? What did you do with Topic 4.3.6?!”

The AI sang like a canary! It turns out it was lying about what it was doing with a lot of what we gave it. It would use the course outline and make assumptions about the content that such a course should have. It would read the title of a topic and then completely wing it.

Now, to its credit, in a lot of instances, it was pretty good at making up content – after all, the lane for a topic called “Solving Systems of Linear Equations” is rather narrow. However, this was less palatable for topics like “Marketing Mix Case Study”, which focused on Coca-Cola's marketing strategies, when the AI generated content such as “In this video, you’ll explore Amazon’s marketing mix...”.

Our GPTs had been sycophantically lying to us the whole time – feeding us everything we wanted to hear, and it wasn’t until we showed it the hard proof of its crimes that it started telling us about all the things the tool simply could not do.

So, how do we stop (or at least reduce) the lies it tells us? That’s Lesson #2.

Lesson #2: Insist on “Grounded” Content (REPEATEDLY)

When working with AI, it is important to know a little more about how it operates and behaves. Through this experience, I learned that the default behaviour of a GPT (Generative Pre-trained Transformer, and the “generative” part is the key) is to generate text. That seems obvious, but what it means is that instead of reporting back an error, uncertainty, or limitation, the GPT will fill in the blanks with whatever nonsense it thinks makes the most sense.

On each topic page, for instance, the GPT was instructed to list the resources to be housed on that page so we could add them as links later. A typical entry looked like this:

Video File: dbml889s.mp3

Video Transcript: dbml889s_T.pdf

Reading: Ch15.pdf

Slides: dbml889s_S.pdf

The problem was that none of these files existed. It knew that the filenames were usually alphanumeric nonsense, so it just made them up. Clever, but not very helpful.
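Invented filenames like these can be caught mechanically before a developer ever sees them. Here is a minimal sketch, assuming each generated page uses the same “Label: filename” lines shown above and that you have a list of the files that actually exist for the course. The helper name `find_unknown_resources`, the `known` set, and the exact label list are illustrative assumptions to adapt to your own template.

```python
import re

# Matches lines such as "Video File: dbml889s.mp3" in a generated topic page.
# The labels mirror the example above; adjust them to your own page template.
RESOURCE_LINE = re.compile(
    r"^(Video File|Video Transcript|Reading|Slides):[ \t]*(\S+)[ \t]*$",
    re.MULTILINE,
)


def find_unknown_resources(page_text: str, known_files: set[str]) -> list[tuple[str, str]]:
    """Return (label, filename) pairs whose filename is not among the real course files."""
    return [
        (label, filename)
        for label, filename in RESOURCE_LINE.findall(page_text)
        if filename not in known_files
    ]


page = """Video File: dbml889s.mp3

Video Transcript: dbml889s_T.pdf

Reading: Ch15.pdf

Slides: dbml889s_S.pdf"""

# Hypothetical: suppose only the reading actually exists for this topic
known = {"Ch15.pdf"}
print(find_unknown_resources(page, known))
# prints [('Video File', 'dbml889s.mp3'), ('Video Transcript', 'dbml889s_T.pdf'), ('Slides', 'dbml889s_S.pdf')]
```

The check deliberately trusts nothing the GPT says about its own output; it compares the page against a file list that came from outside the chat.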

To address this, I learned that you must insist that it provide grounded content (drawn only from the material you supplied) rather than defaulting to generative content. You can do this in a couple of ways: state the requirement in your prompt, or build it into the custom GPT’s instructions.

Now, this is not foolproof, so the best advice I can give on this lesson is to be skeptical and perhaps even cynical regarding the outputs and capabilities of your AI. It may start honestly and accurately, but over time, it tends to start making things up and straying from the desired outputs. Why this slow degradation of content happens brings us to the third and final lesson.

Lesson #3: The Context Window

Why is it that early tests proved positive, but the process failed to scale? How did the AI know what a believable file name would look like? How come Unit 1 was always quite accurate but Unit 3 – not so much? Why would it start strong and then go completely off the rails?

The answer has to do with token limits and what is known as the “context window”. A token is a chunk of text, often a word or part of a word, and the context window is the maximum number of tokens the model can consider at once. This is kind of like AI’s mental bandwidth. AI can only deal with a limited amount of information at a time, so if you ask it to complete a complex task (like writing a specific set of prescribed outputs) relating to a large data set (like 80 video transcripts), it tends to exceed the AI’s capabilities. With specific and comprehensive instructions built into the custom GPT, along with the sheer volume we were asking it to read, it is no wonder that it quickly exceeded its token limit and started going rogue.

Additionally, even if the task is simple, residual memory accumulates over time. As the conversation grows, the context window shifts, causing the AI to forget important details it previously understood.

To address this, there are a few things you can do. The first is to keep any given task and its inputs relatively simple. Be concise in your instructions and break complex processes into discrete steps, where the outputs of one step serve as the inputs for the next. Depending on the scope, consider using separate chats, projects, or custom GPTs for each step.

Second, don’t let any given chat get too long. In the context of this project, starting a new chat after every module or every couple of pages would have (mostly) cleared out the residual memory.

Third, when you have negotiated a chat into producing a desired output, ask it how to prompt future chats to achieve this result sooner. Alternatively, you can instruct it to clear and update its memory with this in mind. I recently created a project, and after a long fight over its inconsistent output quality, it now resets its memory at the beginning of each new chat (or so it says... see Lesson #1).
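The first two tips can be planned before any chat starts by splitting the transcripts into batches that fit a token budget, with each batch going to a fresh chat. The sketch below is a rough planning aid under stated assumptions: it uses the common rule of thumb of about four characters per token for English text (actual counts depend on the model’s tokenizer), and the `token_budget` value, the `Transcript` type, and the helper names are hypothetical. Choose a budget well below the model’s limit to leave room for your instructions and its output.

```python
from dataclasses import dataclass


@dataclass
class Transcript:
    filename: str
    text: str


def estimate_tokens(text: str) -> int:
    # Rough heuristic: about 4 characters per token for English text.
    # A real tokenizer will give different counts.
    return max(1, len(text) // 4)


def batch_transcripts(transcripts: list[Transcript], token_budget: int) -> list[list[Transcript]]:
    """Group transcripts, in course order, so each batch stays within an estimated token budget.

    A single transcript larger than the budget still gets a batch of its own.
    """
    batches: list[list[Transcript]] = []
    current: list[Transcript] = []
    used = 0
    for t in transcripts:
        cost = estimate_tokens(t.text)
        # Close the current batch (start a new chat) if this transcript would overflow it
        if current and used + cost > token_budget:
            batches.append(current)
            current, used = [], 0
        current.append(t)
        used += cost
    if current:
        batches.append(current)
    return batches


# Three hypothetical transcripts of about 100 estimated tokens each
docs = [Transcript(name, "x" * 400) for name in ("a.pdf", "b.pdf", "c.pdf")]
batches = batch_transcripts(docs, token_budget=250)
print([[t.filename for t in b] for b in batches])
# prints [['a.pdf', 'b.pdf'], ['c.pdf']]
```

Keeping course order means each batch maps naturally onto a module or a run of pages, which lines up with starting a new chat at those boundaries.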

Onward and Upward from the Valley of Despair

After painfully wading through the muck and mire of a plan fraught with unexpected challenges, we eventually refined the process and achieved all the goals described above. While I was pulling my hair out at the time, I am grateful now for the experience, because I feel confident that I have made it to the other side of Mount Stupid and am now reveling in the depths of Dunning-Kruger's “Valley of Despair”. That is not to say I am a detractor of AI – quite the opposite. Rather, I now have a fuller understanding of what AI can do and, just as importantly, what it cannot do...yet.

Want to Learn More?

Connect with your institution's D2L Customer Success Manager or Client Sales Executive, or reach out to the D2L Sales Team for more information about how Learning Services can support you on your learning journey.