What I Learned from AI Failures Part 1: My Hapless Plan

If you’re an educator, you know that failure is not something to be feared but rather one of the most powerful teaching methods around. You also know that artificial intelligence (AI) is not just a wave that has been washing our shores but a presence as sure as the tide. With all the outstanding successes and advancements AI has provided in the past few years, I want to tell you about some miserable failures and what I learned from them.

The Context

I recently had the opportunity to work on a project in which we aimed to push the boundaries of what AI could do within the context of a large-scale course uplift. We set out to redesign 32 full college-level courses, including Calculus, Spanish, Business Law, Macroeconomics, Interpreting Literature, and more. Each course was budgeted at approximately 16 hours.

Call it hubris, reckless ambition, or plain old ignorance; whatever you call it, I was sure AI would allow my colleagues and me to soar to previously unthinkable heights with this project. But it did not take long for these wax wings to melt, sending me hurtling back down to earth.

For clarity, on the D2L Learning Services team, the term course uplift is used to describe a project in which we take existing course material and redesign it with a select set of goals in mind. Typically, it may mean transforming SME-written content into accessible learning material, a scaffolded assessment strategy, gamified interactives, and more. In this instance, we were starting with a collection of lecture videos 5 to 30 minutes in length, some open educational resource (OER) readings, a handful of slides, a few practice quizzes, and a final exam.

Our goals for this uplift, simply stated, were as follows:

- Apply a newly custom-branded HTML (v5) Template to each topic page in the course.

- Write introductory content to provide the learner with context before each lecture video. This would sit at the top of each page before the video.

- Add an interactive knowledge check to the bottom of each page just below each video.

- Create a Question Library for each Unit from which formative and summative assessments would be created. Each library would have more than enough questions so that the learners could make multiple attempts as needed and receive new questions each time.

The Plan

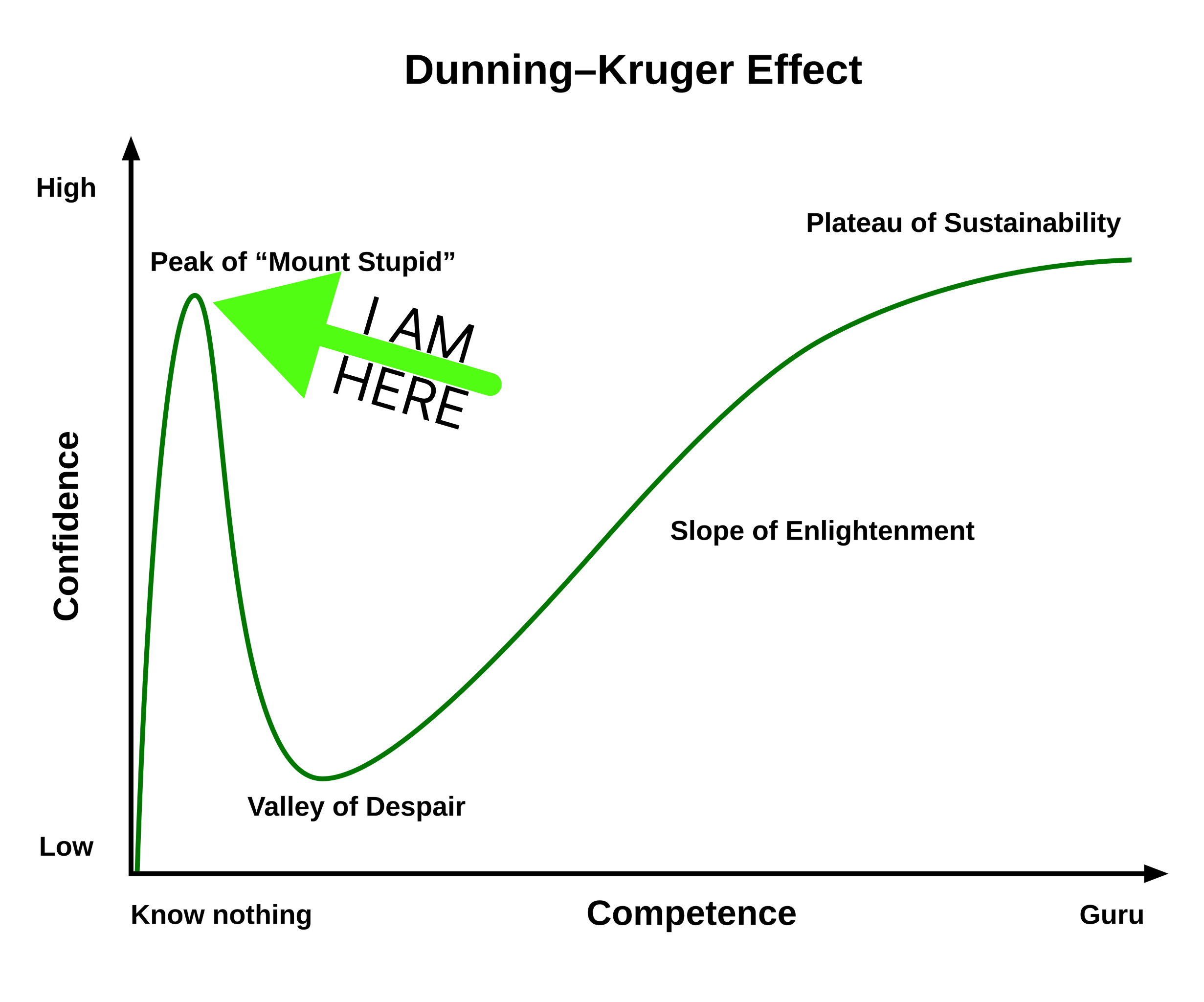

It can be difficult to know when you’re standing on top of Dunning-Kruger’s Mount Stupid (and we have all been there): the peak of the competence-confidence graph, where learning a little about a topic makes you think you’re an expert.

I had experimented with custom GPTs and worked with our internal AI Working Group, both with modest success. I had also studied prompt engineering and how LLMs work, so I was not going into this completely blindfolded. However, as with anyone standing obliviously on top of Mount Stupid, I didn’t know what I didn’t know.

So the plan was to take all of the video transcripts (anywhere from 40 to 120 documents, depending on the course) and feed them as a .zip file into a custom GPT that had been exhaustively trained to unpack the files, read them, match them with associated resources, and generate a prescribed list of outputs. Then, we would use a separate custom GPT to generate anywhere from 30 to 100 quiz questions at a time, which would be added to the Question Library in Sections aligned with each course’s unit structure.
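To get a feel for the scale involved, here is a rough back-of-the-envelope sketch in Python. It uses only the figures mentioned above (32 courses, roughly 16 hours each, 40 to 120 transcripts per course). The even split of the budget across transcripts is purely an assumption for illustration, and the function name `scale_estimate` is hypothetical; it is not how the project was actually tracked.

```python
# Back-of-the-envelope scale of the plan, using only figures from the article.
COURSES = 32
HOURS_PER_COURSE = 16
TRANSCRIPTS_PER_COURSE = (40, 120)  # low and high ends of the stated range


def scale_estimate(courses, hours_per_course, transcripts_range):
    """Return rough totals for the whole uplift (illustrative only)."""
    low, high = transcripts_range
    budget_minutes = hours_per_course * 60
    return {
        "total_hours": courses * hours_per_course,
        "total_transcripts": (courses * low, courses * high),
        # Assumption: the entire course budget is split evenly across
        # transcripts, ignoring templates, quizzes, and review time.
        "minutes_per_transcript": (budget_minutes / high, budget_minutes / low),
    }


estimate = scale_estimate(COURSES, HOURS_PER_COURSE, TRANSCRIPTS_PER_COURSE)
for name, value in estimate.items():
    print(f"{name}: {value}")
```

Even under that generous assumption, the budget leaves somewhere between 8 and 24 minutes per transcript, before any time is spent on the template, the knowledge checks, or the Question Library.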

At this point, I encourage you to pause and consider what flaws you see in this plan – there are a few. Also, for your sake, dear reader, I will conclude this article here and provide the insights I gained from this harrowing experience in What I Learned from AI Failures Part 2: The Lessons Learned.

Want to Learn More?

Connect with your institution’s D2L Customer Success Manager or Client Sales Executive, or reach out to the D2L Sales Team for more information about how Learning Services can support you on your learning journey.